- Aug 12, 2017

-

-

Sean Owen authored

## What changes were proposed in this pull request? This is trivial, but bugged me. We should download software over HTTPS. And we can use RAT 0.12 while at it to pick up bug fixes. ## How was this patch tested? N/A Author: Sean Owen <sowen@cloudera.com> Closes #18927 from srowen/Rat012.

-

- Aug 11, 2017

-

-

Stavros Kontopoulos authored

Fixes the `--packages` flag for the standalone case in cluster mode. Adds to the driver classpath the jars that are resolved via Ivy, along with any other jars passed to `spark.jars`. Jars not resolved by Ivy are downloaded explicitly to a tmp folder on the driver node. Similar code is available in SparkSubmit, so we refactored part of it for use in the DriverWrapper class, which is responsible for launching the driver in standalone cluster mode. Note: In standalone mode `spark.jars` contains the user jar so it can be fetched later on at the executor side. Tested manually by submitting a driver in cluster mode within a standalone cluster and checking that dependencies were resolved at the driver side. Author: Stavros Kontopoulos <st.kontopoulos@gmail.com> Closes #18630 from skonto/fix_packages_stand_alone_cluster.

-

Tejas Patil authored

[SPARK-19122][SQL] Unnecessary shuffle+sort added if join predicates ordering differs from bucketing and sorting order ## What changes were proposed in this pull request? Jira: https://issues.apache.org/jira/browse/SPARK-19122 `leftKeys` and `rightKeys` in `SortMergeJoinExec` are altered based on the ordering of join keys in the child's `outputPartitioning`. This is done every time `requiredChildDistribution` is invoked during query planning. ## How was this patch tested? - Added new test case - Existing tests Author: Tejas Patil <tejasp@fb.com> Closes #16985 from tejasapatil/SPARK-19122_join_order_shuffle.
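The reordering idea can be illustrated outside Spark. The following is a minimal plain-Python sketch, not the code from the patch: `reorder_join_keys` and its column-name arguments are hypothetical, and it assumes keys are simple column names and that reordering is only safe when the child's partitioning columns are exactly the left join keys.

```python
from typing import List, Sequence, Tuple


def reorder_join_keys(
    left_keys: Sequence[str],
    right_keys: Sequence[str],
    partitioning: Sequence[str],
) -> Tuple[List[str], List[str]]:
    """Reorder paired join keys so the left keys follow `partitioning`.

    Hypothetical helper: falls back to the original order when the
    partitioning columns are not exactly the (distinct) left join keys.
    """
    if len(left_keys) != len(right_keys):
        raise ValueError("left and right join keys must pair up")
    distinct = len(set(left_keys)) == len(left_keys)
    if not distinct or sorted(left_keys) != sorted(partitioning):
        return list(left_keys), list(right_keys)
    # Remember which right key belongs to each left key, then emit both
    # sides in the partitioning order so the pairs stay aligned.
    pair = dict(zip(left_keys, right_keys))
    return list(partitioning), [pair[key] for key in partitioning]
```

With keys written as `j, k` against a table bucketed by `k, j`, the helper returns the keys in `k, j` order, which is the situation where an extra shuffle and sort could be avoided.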

-

Tejas Patil authored

## What changes were proposed in this pull request? [SPARK-21595](https://issues.apache.org/jira/browse/SPARK-21595) reported that there is excessive spilling to disk due to default spill threshold for `ExternalAppendOnlyUnsafeRowArray` being quite small for WINDOW operator. Old behaviour of WINDOW operator (pre https://github.com/apache/spark/pull/16909) would hold data in an array for first 4096 records post which it would switch to `UnsafeExternalSorter` and start spilling to disk after reaching `spark.shuffle.spill.numElementsForceSpillThreshold` (or earlier if there was paucity of memory due to excessive consumers). Currently the (switch from in-memory to `UnsafeExternalSorter`) and (`UnsafeExternalSorter` spilling to disk) for `ExternalAppendOnlyUnsafeRowArray` is controlled by a single threshold. This PR aims to separate that to have more granular control. ## How was this patch tested? Added unit tests Author: Tejas Patil <tejasp@fb.com> Closes #18843 from tejasapatil/SPARK-21595.
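To make the two separate thresholds concrete, here is a small plain-Python model. It is only a sketch under stated assumptions: `RowBuffer`, `in_memory_threshold`, and `spill_threshold` are hypothetical names, lists stand in for the in-memory array, the sorter, and the disk, and the real `ExternalAppendOnlyUnsafeRowArray` is more involved.

```python
from typing import List


class RowBuffer:
    """Toy model: one threshold for switching to a sorter, one for spilling."""

    def __init__(self, in_memory_threshold: int, spill_threshold: int) -> None:
        self.in_memory_threshold = in_memory_threshold
        self.spill_threshold = spill_threshold
        self.array: List[object] = []    # plain in-memory array
        self.sorter: List[object] = []   # stand-in for sorter-held rows
        self.spilled: List[object] = []  # stand-in for rows written to disk
        self.using_sorter = False
        self.spill_count = 0

    def add(self, row: object) -> None:
        if not self.using_sorter and len(self.array) < self.in_memory_threshold:
            self.array.append(row)
            return
        if not self.using_sorter:
            # First threshold reached: hand the buffered rows to the sorter.
            self.sorter.extend(self.array)
            self.array.clear()
            self.using_sorter = True
        self.sorter.append(row)
        if len(self.sorter) >= self.spill_threshold:
            # Second threshold reached: spill the sorter's rows.
            self.spilled.extend(self.sorter)
            self.sorter.clear()
            self.spill_count += 1

    def __len__(self) -> int:
        return len(self.array) + len(self.sorter) + len(self.spilled)
```

Keeping the thresholds separate means the switch to the sorter can stay early while spilling is deferred until much more data has accumulated.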

-

LucaCanali authored

Add an option to the JDBC data source to initialize the environment of the remote database session ## What changes were proposed in this pull request? This proposes an option for the JDBC data source, tentatively called `sessionInitStatement`, to implement the session initialization functionality present, for example, in the Sqoop connector for Oracle (see https://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html#_oraoop_oracle_session_initialization_statements). After each database session is opened to the remote DB, and before starting to read data, this option executes a custom SQL statement (or a PL/SQL block in the case of Oracle). See also https://issues.apache.org/jira/browse/SPARK-21519 ## How was this patch tested? Manually tested using Spark SQL data source and Oracle JDBC Author: LucaCanali <luca.canali@cern.ch> Closes #18724 from LucaCanali/JDBC_datasource_sessionInitStatement.
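A usage sketch from PySpark might look like the following. The helper names, the JDBC URL, the table name, and the `ALTER SESSION` statement are illustrative assumptions; only the option name `sessionInitStatement` comes from the description above, and `read_table` expects an already created `SparkSession` with a suitable JDBC driver on the classpath.

```python
from typing import Dict


def jdbc_options(url: str, table: str, session_init: str) -> Dict[str, str]:
    """Build reader options, including the session initialization statement."""
    return {
        "url": url,
        "dbtable": table,
        # Executed once per opened database session, before reading data.
        "sessionInitStatement": session_init,
    }


def read_table(spark, options: Dict[str, str]):
    """Load a DataFrame through the JDBC source (needs a live SparkSession)."""
    return spark.read.format("jdbc").options(**options).load()


# Hypothetical values, for illustration only.
example_options = jdbc_options(
    url="jdbc:oracle:thin:@//dbhost.example.com:1521/service",
    table="MYSCHEMA.MYTABLE",
    session_init="ALTER SESSION SET TIME_ZONE = 'UTC'",
)
```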

-

Kent Yao authored

## What changes were proposed in this pull request? 1. In the Spark Web UI, the Details for Stage page doesn't have a navigation bar at the bottom. When we scroll down to the bottom, it is better to see a navigation bar right there to go wherever we want. 2. Executor ID is not equivalent to Host; it may be better to separate them so that we can group the tasks by host. ## How was this patch tested? Manually tested. Author: Kent Yao <yaooqinn@hotmail.com> Closes #18893 from yaooqinn/SPARK-21675.

-

- Aug 10, 2017

-

-

Reynold Xin authored

## What changes were proposed in this pull request? This patch removes the unused `SessionCatalog.getTableMetadataOption` and `ExternalCatalog.getTableOption`. ## How was this patch tested? Removed the test case. Author: Reynold Xin <rxin@databricks.com> Closes #18912 from rxin/remove-getTableOption.

-

Peng Meng authored

## What changes were proposed in this pull request? When training an RF model, there are many warning messages like this: > WARN RandomForest: Tree learning is using approximately 268492800 bytes per iteration, which exceeds requested limit maxMemoryUsage=268435456. This allows splitting 2622 nodes in this iteration. This warning message is unnecessary and the data is not accurate. Actually, the warning is shown whenever not all the nodes can be split in one iteration. In most cases the nodes cannot all be split in a single iteration, so the warning is shown on nearly every iteration. ## How was this patch tested? The existing UT Author: Peng Meng <peng.meng@intel.com> Closes #18868 from mpjlu/fixRFwarning.

-

Adrian Ionescu authored

## What changes were proposed in this pull request? This patch introduces an internal interface for tracking metrics and/or statistics on data on the fly, as it is being written to disk during a `FileFormatWriter` job and partially reimplements SPARK-20703 in terms of it. The interface basically consists of 3 traits: - `WriteTaskStats`: just a tag for classes that represent statistics collected during a `WriteTask`. The only constraint it adds is that the class should be `Serializable`, as instances of it will be collected on the driver from all executors at the end of the `WriteJob`. - `WriteTaskStatsTracker`: a trait for classes that can actually compute statistics based on tuples that are processed by a given `WriteTask` and eventually produce a `WriteTaskStats` instance. - `WriteJobStatsTracker`: a trait for classes that act as containers of `Serializable` state that's necessary for instantiating `WriteTaskStatsTracker` on executors and finally process the resulting collection of `WriteTaskStats`, once they're gathered back on the driver. A potential future use of this interface is, e.g., CBO stats maintenance during `INSERT INTO table ... ` operations. ## How was this patch tested? Existing tests for SPARK-20703 exercise the new code: `hive/SQLMetricsSuite`, `sql/JavaDataFrameReaderWriterSuite`, etc. Author: Adrian Ionescu <adrian@databricks.com> Closes #18884 from adrian-ionescu/write-stats-tracker-api.
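The division of labour between the three traits can be mirrored in a few lines of plain Python. This is a sketch of the shape only: the class names follow the description above, but the method names (`new_row`, `get_final_stats`, `new_task_instance`, `process_stats`) and the row-counting implementation are assumptions made for illustration, not the Scala interface itself.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Iterable


class WriteTaskStats:
    """Tag type for statistics produced by a single write task."""


@dataclass(frozen=True)
class RowCountStats(WriteTaskStats):
    num_rows: int


class WriteTaskStatsTracker(ABC):
    """Runs on an executor and observes the rows of one task."""

    @abstractmethod
    def new_row(self, row: object) -> None: ...

    @abstractmethod
    def get_final_stats(self) -> WriteTaskStats: ...


class WriteJobStatsTracker(ABC):
    """Lives on the driver: creates task trackers, then merges their stats."""

    @abstractmethod
    def new_task_instance(self) -> WriteTaskStatsTracker: ...

    @abstractmethod
    def process_stats(self, stats: Iterable[WriteTaskStats]) -> None: ...


class RowCountTaskTracker(WriteTaskStatsTracker):
    def __init__(self) -> None:
        self._rows = 0

    def new_row(self, row: object) -> None:
        self._rows += 1

    def get_final_stats(self) -> WriteTaskStats:
        return RowCountStats(self._rows)


class RowCountJobTracker(WriteJobStatsTracker):
    def __init__(self) -> None:
        self.total_rows = 0

    def new_task_instance(self) -> WriteTaskStatsTracker:
        return RowCountTaskTracker()

    def process_stats(self, stats: Iterable[WriteTaskStats]) -> None:
        # Only the stats this tracker understands contribute to the total.
        self.total_rows = sum(s.num_rows for s in stats if isinstance(s, RowCountStats))
```

A job tracker hands out one task tracker per task, each task tracker sees only its own rows, and the per-task results are combined once at the end.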

-

- Aug 09, 2017

-

-

bravo-zhang authored

## What changes were proposed in this pull request? Currently `df.na.replace("*", Map[String, String]("NULL" -> null))` throws an exception. This PR enables passing null/None as a value in the replacement map in DataFrame.replace(). Note that the replacement map keys and values should still be the same type, while the values can be a mix of null/None and that type. This PR enables, for example, the following operations: `df.na.replace("*", Map[String, String]("NULL" -> null))`(scala) `df.na.replace("*", Map[Any, Any](60 -> null, 70 -> 80))`(scala) `df.na.replace('Alice', None)`(python) `df.na.replace([10, 20])`(python, replacing with None is by default) One use case could be: I want to replace all the empty strings with null/None because they were incorrectly generated, and then drop all null/None data: `df.na.replace("*", Map("" -> null)).na.drop()`(scala) `df.replace(u'', None).dropna()`(python) ## How was this patch tested? Scala unit test. Python doctest and unit test. Author: bravo-zhang <mzhang1230@gmail.com> Closes #18820 from bravo-zhang/spark-14932.

peay authored

## What changes were proposed in this pull request? This modification increases the timeout for `serveIterator` (which is not dynamically configurable). This fixes timeout issues in pyspark when using `collect` and similar functions, in cases where Python may take more than a couple seconds to connect. See https://issues.apache.org/jira/browse/SPARK-21551 ## How was this patch tested? Ran the tests. cc rxin Author: peay <peay@protonmail.com> Closes #18752 from peay/spark-21551.

-

Jose Torres authored

## What changes were proposed in this pull request? Push filter predicates through EventTimeWatermark if they're deterministic and do not reference the watermarked attribute. (This is similar but not identical to the logic for pushing through UnaryNode.) ## How was this patch tested? unit tests Author: Jose Torres <joseph-torres@databricks.com> Closes #18790 from joseph-torres/SPARK-21587.
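The pushdown condition can be expressed as a simple split over the filter's conjuncts. The sketch below is plain Python rather than a Catalyst rule; `Predicate`, its fields, and `split_for_pushdown` are hypothetical names, and the example predicates are made up.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple


@dataclass(frozen=True)
class Predicate:
    sql: str
    references: FrozenSet[str]   # attribute names the predicate reads
    deterministic: bool = True


def split_for_pushdown(
    predicates: Sequence[Predicate], watermark_attr: str
) -> Tuple[List[Predicate], List[Predicate]]:
    """Split conjuncts into (pushable below the watermark, must stay above)."""
    pushable: List[Predicate] = []
    remaining: List[Predicate] = []
    for pred in predicates:
        # Pushable only if deterministic and independent of the watermarked column.
        if pred.deterministic and watermark_attr not in pred.references:
            pushable.append(pred)
        else:
            remaining.append(pred)
    return pushable, remaining
```

For a filter on `a > 1 AND event_time > x AND rand() > 0.5` with `event_time` watermarked, only `a > 1` would move below the watermark node.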

-

gatorsmile authored

## What changes were proposed in this pull request? This PR is to add the spark version info in the table metadata. When creating the table, this value is assigned. It can help users find which version of Spark was used to create the table. ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #18709 from gatorsmile/addVersion.

-

Takeshi Yamamuro authored

## What changes were proposed in this pull request? This PR updates `lz4-java` to the latest (v1.4.0) and removes the custom `LZ4BlockInputStream`. We currently use the custom `LZ4BlockInputStream` to read concatenated byte streams in shuffle. But this functionality has been implemented in the latest lz4-java (https://github.com/lz4/lz4-java/pull/105). So we can update to the latest version and remove the custom `LZ4BlockInputStream`. Major diffs between the latest release and v1.3.0 in the master are as follows (https://github.com/lz4/lz4-java/compare/62f7547abb0819d1ca1e669645ee1a9d26cd60b0...6d4693f56253fcddfad7b441bb8d917b182efa2d): - fixed NPE in XXHashFactory similarly - Don't place resources in default package to support shading - Fixes ByteBuffer methods failing to apply arrayOffset() for array-backed - Try to load lz4-java from java.library.path, then fallback to bundled - Add ppc64le binary - Add s390x JNI binding - Add basic LZ4 Frame v1.5.0 support - enable aarch64 support for lz4-java - Allow unsafeInstance() for ppc64le architecture - Add unsafeInstance support for AArch64 - Support 64-bit JNI build on Solaris - Avoid over-allocating a buffer - Allow EndMark to be incompressible for LZ4FrameInputStream. - Concat byte stream ## How was this patch tested? Existing tests. Author: Takeshi Yamamuro <yamamuro@apache.org> Closes #18883 from maropu/SPARK-21276.

-

vinodkc authored

## What changes were proposed in this pull request? Resources are now released in Core (SparkSubmitArguments.scala), Spark-launcher (AbstractCommandBuilder.java), and resource-managers YARN (Client.scala). ## How was this patch tested? No new test cases added; unit tests passed. Author: vinodkc <vinod.kc.in@gmail.com> Closes #18880 from vinodkc/br_fixresouceleak.

-

10087686 authored

[SPARK-21663][TESTS] test("remote fetch below max RPC message size") should call masterTracker.stop() in MapOutputTrackerSuite Signed-off-by: 10087686 <wang.jiaochun@zte.com.cn> ## What changes were proposed in this pull request? After the unit test ends, masterTracker.stop() should be called to free resources. ## How was this patch tested? Ran unit tests. Author: 10087686 <wang.jiaochun@zte.com.cn> Closes #18867 from wangjiaochun/mapout.

WeichenXu authored

## What changes were proposed in this pull request? Update breeze to 0.13.1 for an emergency bugfix in strong wolfe line search https://github.com/scalanlp/breeze/pull/651 ## How was this patch tested? N/A Author: WeichenXu <WeichenXu123@outlook.com> Closes #18797 from WeichenXu123/update-breeze.

-

Anderson Osagie authored

## What changes were proposed in this pull request? Currently, each application and each worker creates their own proxy servlet. Each proxy servlet is backed by its own HTTP client and a relatively large number of selector threads. This is excessive but was fixed (to an extent) by https://github.com/apache/spark/pull/18437. However, a single HTTP client (backed by a single selector thread) should be enough to handle all proxy requests. This PR creates a single proxy servlet no matter how many applications and workers there are. ## How was this patch tested? The unit tests for rewriting proxied locations and headers were updated. I then spun up a 100 node cluster to ensure that proxying worked correctly. jiangxb1987 Please let me know if there's anything else I can do to help push this through. Thanks! Author: Anderson Osagie <osagie@gmail.com> Closes #18499 from aosagie/fix/minimize-proxy-threads.

-

pgandhi authored

The Executors tab on the Spark UI shows a task as completed when the executor process running that task is killed using the kill command. Added the case ExecutorLostFailure, which was previously missing, so the default case was executed and the task was marked as completed. This case covers all situations where the executor's connection to the Spark driver was lost, such as killing the executor process or a network failure. ## How was this patch tested? Manually tested the fix by observing the UI change before and after. Before: <img width="1398" alt="screen shot-before" src="https://user-images.githubusercontent.com/22228190/28482929-571c9cea-6e30-11e7-93dd-728de5cdea95.png"> After: <img width="1385" alt="screen shot-after" src="https://user-images.githubusercontent.com/22228190/28482964-8649f5ee-6e30-11e7-91bd-2eb2089c61cc.png"> Author: pgandhi <pgandhi@yahoo-inc.com> Author: pgandhi999 <parthkgandhi9@gmail.com> Closes #18707 from pgandhi999/master.
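The effect of a missing case that falls through to a default can be shown with a toy classifier. This is an illustrative plain-Python sketch, not the UI listener code: the function, the set names, and the use of `ExceptionFailure` as the previously handled reason are assumptions; only `ExecutorLostFailure` is taken from the description above.

```python
from typing import AbstractSet

# Assumed set of reasons that were already counted as failures.
FAILURE_REASONS_BEFORE = frozenset({"ExceptionFailure"})
# The fix described above adds an explicit ExecutorLostFailure case.
FAILURE_REASONS_AFTER = FAILURE_REASONS_BEFORE | {"ExecutorLostFailure"}


def task_status(reason: str, failure_reasons: AbstractSet[str] = FAILURE_REASONS_AFTER) -> str:
    """Classify a finished task; anything unlisted falls through to 'completed'."""
    return "failed" if reason in failure_reasons else "completed"
```

With the older set, a task ending with `ExecutorLostFailure` is classified as completed, which matches the misleading display described in the message.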

-

Xingbo Jiang authored

## What changes were proposed in this pull request? The Window `rangeBetween()` API should allow literal boundaries, which means that a window range frame can be computed over double/date/timestamp values. An example of the use case:

```
SELECT val_timestamp, cate, avg(val_timestamp)
  OVER(PARTITION BY cate ORDER BY val_timestamp
       RANGE BETWEEN CURRENT ROW AND interval 23 days 4 hours FOLLOWING)
FROM testData
```

This PR refactors the Window `rangeBetween` and `rowsBetween` API, while legacy user code should still be valid. ## How was this patch tested? Added new test cases both in `DataFrameWindowFunctionsSuite` and in `window.sql`. Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #18814 from jiangxb1987/literal-boundary.

-

- Aug 08, 2017

-

-

Shixiong Zhu authored

## What changes were proposed in this pull request? When I was investigating a flaky test, I realized that many places don't check the return value of `HDFSMetadataLog.get(batchId: Long): Option[T]`. When a batch is supposed to be there, the caller just ignores None rather than throwing an error. If some bug causes a query not to generate a batch metadata file, this behavior will hide it, allow the query to continue running, and finally delete metadata logs, making it hard to debug. This PR ensures that places calling HDFSMetadataLog.get always check the return value. ## How was this patch tested? Jenkins Author: Shixiong Zhu <shixiong@databricks.com> Closes #18799 from zsxwing/SPARK-21596.
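The pattern of failing loudly on a missing batch, instead of silently skipping it, looks roughly like this in plain Python. `MetadataLog` and `get_required_batch` are hypothetical stand-ins for illustration, with `None` playing the role of Scala's `None`.

```python
from typing import Dict, Optional


class MetadataLog:
    """Toy in-memory stand-in for a batch metadata log."""

    def __init__(self) -> None:
        self._batches: Dict[int, str] = {}

    def add(self, batch_id: int, metadata: str) -> None:
        self._batches[batch_id] = metadata

    def get(self, batch_id: int) -> Optional[str]:
        # Returns None when the batch is absent, like Option[T] being None.
        return self._batches.get(batch_id)


def get_required_batch(log: MetadataLog, batch_id: int) -> str:
    """Fetch a batch that must exist; raise instead of ignoring a missing one."""
    metadata = log.get(batch_id)
    if metadata is None:
        raise RuntimeError(f"batch {batch_id} doesn't exist in the metadata log")
    return metadata
```

Callers that expect a batch use `get_required_batch`, so a missing metadata file surfaces immediately rather than after later cleanup has removed the evidence.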

-

Sean Owen authored

## What changes were proposed in this pull request? Taking over https://github.com/apache/spark/pull/18789 ; Closes #18789 Update Jackson to 2.6.7 uniformly, and some components to 2.6.7.1, to get some fixes and prep for Scala 2.12 ## How was this patch tested? Existing tests Author: Sean Owen <sowen@cloudera.com> Closes #18881 from srowen/SPARK-20433.

-

Marcelo Vanzin authored

Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #18886 from vanzin/SPARK-21671.

-

Marcelo Vanzin authored

This change adds an in-memory implementation of KVStore that can be used by the live UI. The implementation is not fully optimized, neither for speed nor space, but should be fast enough for using in the listener bus. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #18395 from vanzin/SPARK-20655.

-

hyukjinkwon authored

## What changes were proposed in this pull request? This PR proposes to use https://rversions.r-pkg.org/r-release-win instead of https://rversions.r-pkg.org/r-release to check R's version for Windows correctly. We met a syncing problem with the Windows release (see #15709) before. To cut this short, it was ... - 3.3.2 was released, but not for Windows for a few hours. - `https://rversions.r-pkg.org/r-release` returns the latest as 3.3.2 and the download link for 3.3.1 becomes `windows/base/old` by our script - 3.3.2 is not released for Windows yet - 3.3.1 is still not in `windows/base/old` but in `windows/base` as the latest - Failed to download with the `windows/base/old` link and builds were broken I believe we are not the only ones who met this problem. Please see https://github.com/krlmlr/r-appveyor/commit/01ce943929993bbf27facd2cdc20ae2e03808eb4 and also note that this `r-release-win` API came out between 3.3.1 and 3.3.2 (presumably to deal with this issue), please see `https://github.com/metacran/rversions.app/issues/2`. Using this API will prevent the problem, although it looks quite rare judging from the commit logs in https://github.com/metacran/rversions.app/commits/master. After 3.3.2, both `r-release-win` and `r-release` are being updated together. ## How was this patch tested? AppVeyor tests. Author: hyukjinkwon <gurwls223@gmail.com> Closes #18859 from HyukjinKwon/use-reliable-link.

-

Liang-Chi Hsieh authored

## What changes were proposed in this pull request? If we create a type alias for a type workable with Dataset, the type alias doesn't work with Dataset. A reproducible case looks like: `object C { type TwoInt = (Int, Int); def tupleTypeAlias: TwoInt = (1, 1) }` `Seq(1).toDS().map(_ => ("", C.tupleTypeAlias))` It throws an exception like: `type T1 is not a class scala.ScalaReflectionException: type T1 is not a class at scala.reflect.api.Symbols$SymbolApi$class.asClass(Symbols.scala:275) ...` This patch accesses the dealias of type in many places in `ScalaReflection` to fix it. ## How was this patch tested? Added test case. Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #18813 from viirya/SPARK-21567.

Marcos P. Sanchez authored

## What changes were proposed in this pull request? This commit adds a new argument for IllegalArgumentException message. This recent commit added the argument: [https://github.com/apache/spark/commit/dcac1d57f0fd05605edf596c303546d83062a352](https://github.com/apache/spark/commit/dcac1d57f0fd05605edf596c303546d83062a352) ## How was this patch tested? Unit tests passed. Author: Marcos P. Sanchez <mpenate@stratio.com> Closes #18862 from mpenate/feature/exception-errorifexists.

-

- Aug 07, 2017

-

-

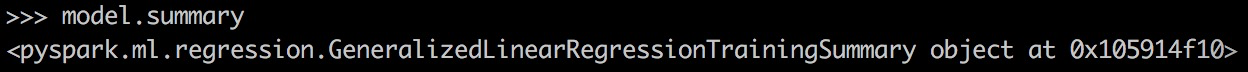

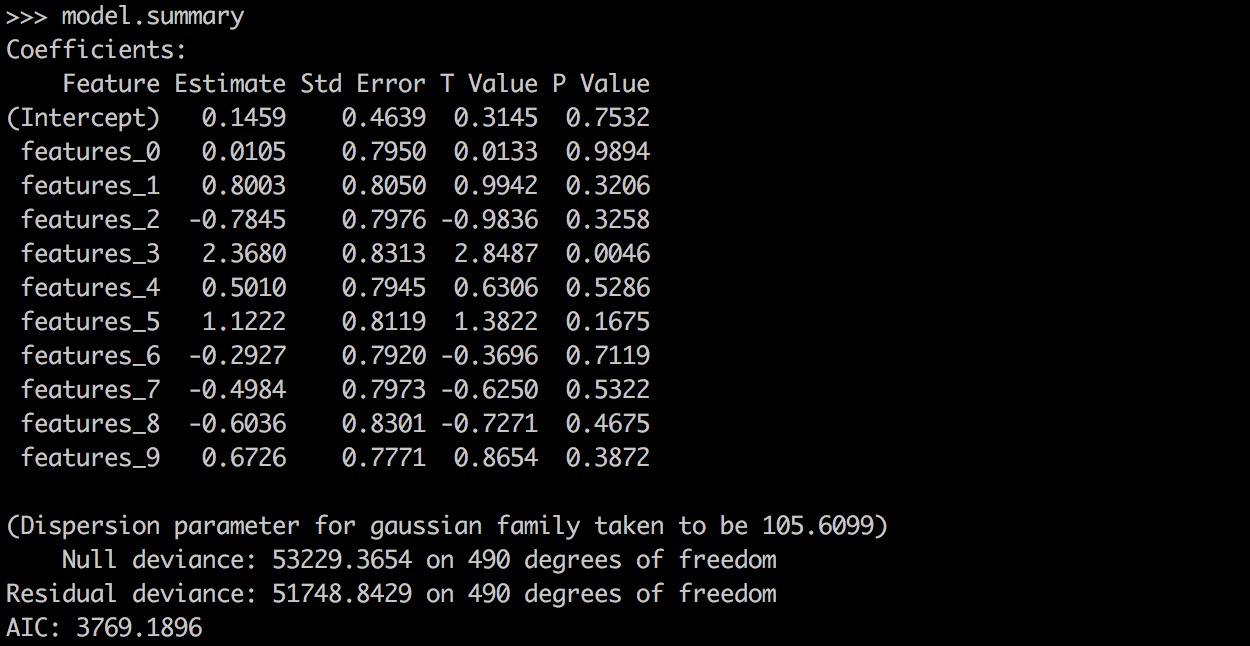

Yanbo Liang authored

## What changes were proposed in this pull request? PySpark GLR `model.summary` should return a printable representation by calling Scala `toString`. ## How was this patch tested?

```
from pyspark.ml.regression import GeneralizedLinearRegression

dataset = spark.read.format("libsvm").load("data/mllib/sample_linear_regression_data.txt")
glr = GeneralizedLinearRegression(family="gaussian", link="identity", maxIter=10, regParam=0.3)
model = glr.fit(dataset)
model.summary
```

Before this PR: After this PR: Author: Yanbo Liang <ybliang8@gmail.com> Closes #18870 from yanboliang/spark-19270.

Ajay Saini authored

## What changes were proposed in this pull request? Added DefaultParamsWriteable, DefaultParamsReadable, DefaultParamsWriter, and DefaultParamsReader to Python to support Python-only persistence of Json-serializable parameters. ## How was this patch tested? Instantiated an estimator with Json-serializable parameters (ex. LogisticRegression), saved it using the added helper functions, and loaded it back, and compared it to the original instance to make sure it is the same. This test was both done in the Python REPL and implemented in the unit tests. Note to reviewers: there are a few excess comments that I left in the code for clarity but will remove before the code is merged to master. Author: Ajay Saini <ajays725@gmail.com> Closes #18742 from ajaysaini725/PythonPersistenceHelperFunctions.

-

gatorsmile authored

[SPARK-21648][SQL] Fix confusing assert failure in JDBC source when parallel fetching parameters are not properly provided. ### What changes were proposed in this pull request?

```SQL
CREATE TABLE mytesttable1
USING org.apache.spark.sql.jdbc
OPTIONS (
  url 'jdbc:mysql://${jdbcHostname}:${jdbcPort}/${jdbcDatabase}?user=${jdbcUsername}&password=${jdbcPassword}',
  dbtable 'mytesttable1',
  paritionColumn 'state_id',
  lowerBound '0',
  upperBound '52',
  numPartitions '53',
  fetchSize '10000'
)
```

The above option name `paritionColumn` is misspelled. That means users did not provide a value for `partitionColumn`. In such a case, users hit a confusing error.

```
AssertionError: assertion failed
java.lang.AssertionError: assertion failed
at scala.Predef$.assert(Predef.scala:156)
at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:39)
at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:312)
```

### How was this patch tested? Added a test case Author: gatorsmile <gatorsmile@gmail.com> Closes #18864 from gatorsmile/jdbcPartCol.
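A descriptive check in place of a bare assertion could look like the sketch below. It is a plain-Python illustration and not the patch's logic: the all-or-none rule over the four options and the wording of the error message are assumptions chosen to show how a misspelled option name can be reported clearly.

```python
from typing import Mapping

PARTITION_OPTIONS = ("partitionColumn", "lowerBound", "upperBound", "numPartitions")


def check_partition_options(options: Mapping[str, str]) -> None:
    """Raise a descriptive error unless all or none of the options are set."""
    present = [name for name in PARTITION_OPTIONS if name in options]
    if present and len(present) != len(PARTITION_OPTIONS):
        missing = [name for name in PARTITION_OPTIONS if name not in options]
        raise ValueError(
            "Parallel JDBC reads need all of "
            f"{', '.join(PARTITION_OPTIONS)}; missing: {', '.join(missing)}"
        )
```

Given the options from the `CREATE TABLE` statement above, the check would name `partitionColumn` as missing, pointing directly at the misspelled `paritionColumn`.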

Jose Torres authored

## What changes were proposed in this pull request? Propagate metadata in attribute replacement during streaming execution. This is necessary for EventTimeWatermarks consuming replaced attributes. ## How was this patch tested? new unit test, which was verified to fail before the fix Author: Jose Torres <joseph-torres@databricks.com> Closes #18840 from joseph-torres/SPARK-21565.

-

Mac authored

## What changes were proposed in this pull request? Enhanced some existing documentation. Author: Mac <maclockard@gmail.com> Closes #18710 from maclockard/maclockard-patch-1.

-

Xiao Li authored

### What changes were proposed in this pull request? author: BoleynSu closes https://github.com/apache/spark/pull/18836

```Scala
val df = Seq((1, 1)).toDF("i", "j")
df.createOrReplaceTempView("T")
withSQLConf(SQLConf.AUTO_BROADCASTJOIN_THRESHOLD.key -> "-1") {
  sql("select * from (select a.i from T a cross join T t where t.i = a.i) as t1 " +
    "cross join T t2 where t2.i = t1.i").explain(true)
}
```

The above code could cause the following exception:

```
SortMergeJoinExec should not take Cross as the JoinType
java.lang.IllegalArgumentException: SortMergeJoinExec should not take Cross as the JoinType
at org.apache.spark.sql.execution.joins.SortMergeJoinExec.outputOrdering(SortMergeJoinExec.scala:100)
```

Our SortMergeJoinExec supports CROSS. We should not hit such an exception. This PR is to fix the issue. ### How was this patch tested? Modified the two existing test cases. Author: Xiao Li <gatorsmile@gmail.com> Author: Boleyn Su <boleyn.su@gmail.com> Closes #18863 from gatorsmile/pr-18836.

-

zhoukang authored

## What changes were proposed in this pull request? **For the modules below:** common/network-common streaming sql/core sql/catalyst **tests.jar is installed or deployed twice, like:** `[DEBUG] Installing org.apache.spark:spark-streaming_2.11/maven-metadata.xml to /home/mi/.m2/repository/org/apache/spark/spark-streaming_2.11/maven-metadata-local.xml [INFO] Installing /home/mi/Work/Spark/scala2.11/spark/streaming/target/spark-streaming_2.11-2.1.0-mdh2.1.0.1-SNAPSHOT-tests.jar to /home/mi/.m2/repository/org/apache/spark/spark-streaming_2.11/2.1.0-mdh2.1.0.1-SNAPSHOT/spark-streaming_2.11-2.1.0-mdh2.1.0.1-SNAPSHOT-tests.jar [DEBUG] Skipped re-installing /home/mi/Work/Spark/scala2.11/spark/streaming/target/spark-streaming_2.11-2.1.0-mdh2.1.0.1-SNAPSHOT-tests.jar to /home/mi/.m2/repository/org/apache/spark/spark-streaming_2.11/2.1.0-mdh2.1.0.1-SNAPSHOT/spark-streaming_2.11-2.1.0-mdh2.1.0.1-SNAPSHOT-tests.jar, seems unchanged` **The reason is below:** `[DEBUG] (f) artifact = org.apache.spark:spark-streaming_2.11:jar:2.1.0-mdh2.1.0.1-SNAPSHOT [DEBUG] (f) attachedArtifacts = [org.apache.spark:spark-streaming_2.11:test-jar:tests:2.1.0-mdh2.1.0.1-SNAPSHOT, org.apache.spark:spark-streaming_2.11:jar:tests:2.1.0-mdh2.1.0.1-SNAPSHOT, org.apache.spark:spark-streaming_2.11:java-source:sources:2.1.0-mdh2.1.0.1-SNAPSHOT, org.apache.spark:spark-streaming_2.11:java-source:test-sources:2.1.0-mdh2.1.0.1-SNAPSHOT, org.apache.spark:spark-streaming_2.11:javadoc:javadoc:2.1.0-mdh2.1.0.1-SNAPSHOT]` When executing 'mvn deploy' to Nexus during a release, it will fail since artifacts in a release Nexus repository cannot be overridden. ## How was this patch tested? Execute 'mvn clean install -Pyarn -Phadoop-2.6 -Phadoop-provided -DskipTests' Author: zhoukang <zhoukang199191@gmail.com> Closes #18745 from caneGuy/zhoukang/fix-installtwice.

-

Peng Meng authored

## What changes were proposed in this pull request? The comments on parentStats in RF are wrong. parentStats is not only used for the first iteration; it is used in all iterations for unordered features. ## How was this patch tested? Author: Peng Meng <peng.meng@intel.com> Closes #18832 from mpjlu/fixRFDoc.

-

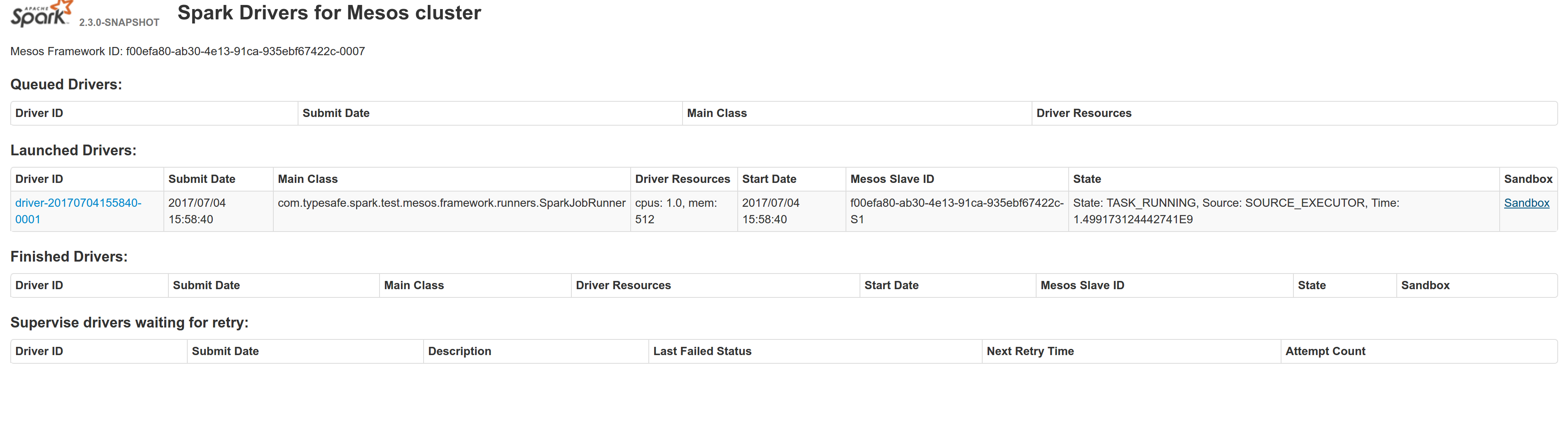

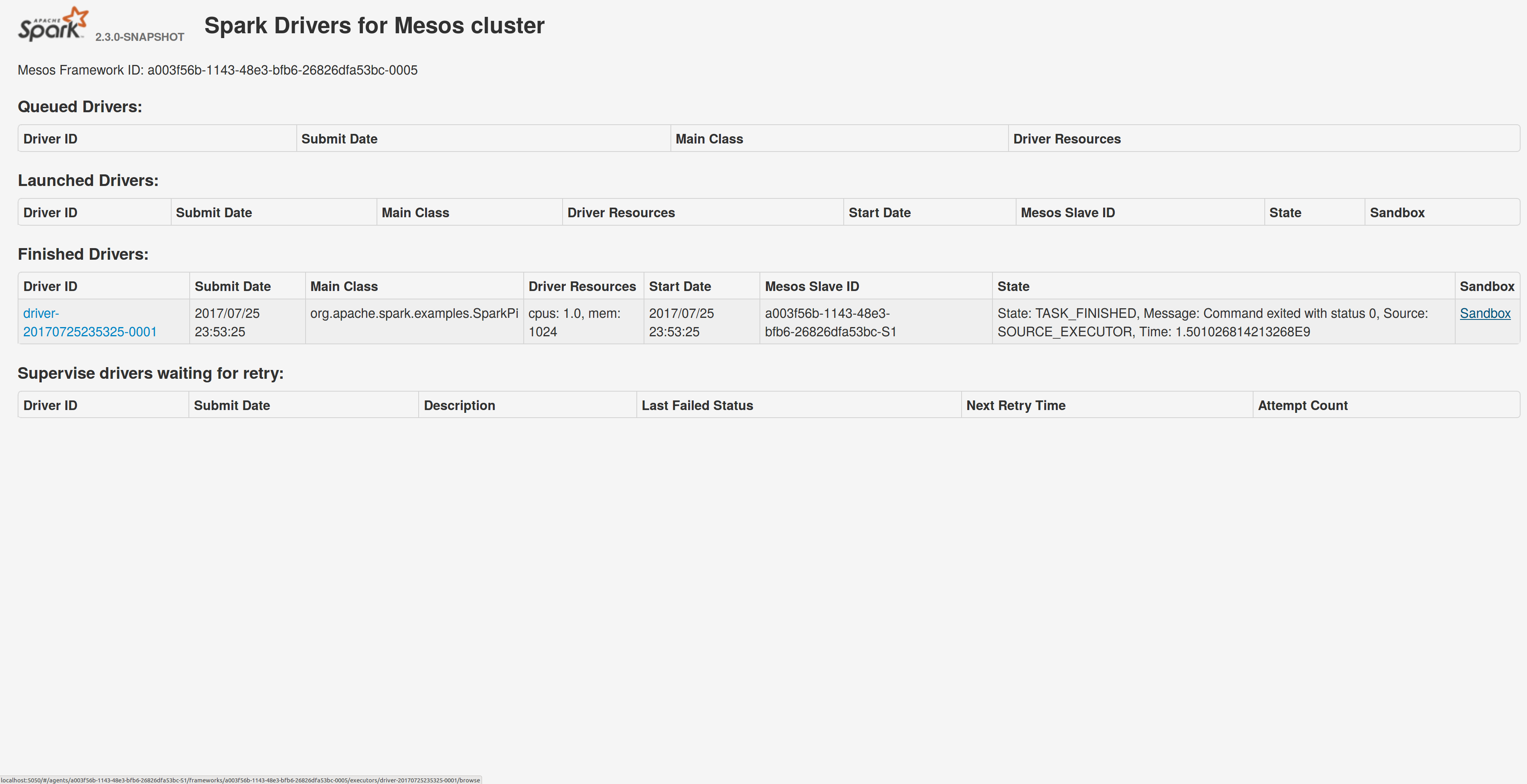

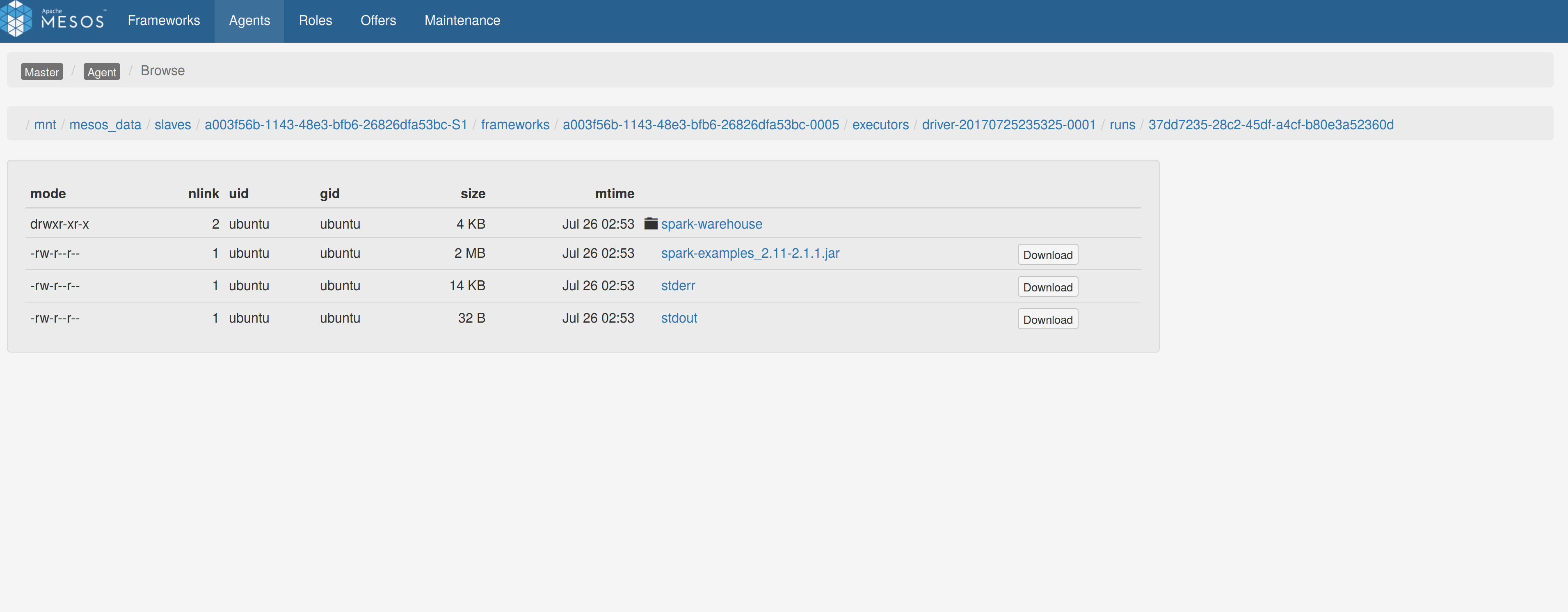

Stavros Kontopoulos authored

## What changes were proposed in this pull request? Adds a sandbox link per driver in the dispatcher ui with minimal changes after a bug was fixed here: https://issues.apache.org/jira/browse/MESOS-4992 The sandbox uri has the following format: http://<proxy_uri>/#/slaves/\<agent-id\>/frameworks/\<scheduler-id\>/executors/\<driver-id\>/browse For dc/os the proxy uri is <dc/os uri>/mesos. For the dc/os deployment scenario and to make things easier I introduced a new config property named `spark.mesos.proxy.baseURL` which should be passed to the dispatcher when launched using --conf. If no such configuration is detected then no sandbox uri is shown, and there is an empty column with a header (this can be changed so nothing is shown). Within dc/os the base url must be a property for the dispatcher that we should add in the future here: https://github.com/mesosphere/universe/blob/9e7c909c3b8680eeb0494f2a58d5746e3bab18c1/repo/packages/S/spark/26/config.json It is not easy to detect that uri in different environments, so the user should pass it. ## How was this patch tested? Tested with the mesos test suite here: https://github.com/typesafehub/mesos-spark-integration-tests. Attached image shows the ui modification where the sandbox header is added. Tested the uri redirection the way it was suggested here: https://issues.apache.org/jira/browse/MESOS-4992 Built mesos 1.4 from the master branch and started the mesos dispatcher with the command: `./sbin/start-mesos-dispatcher.sh --conf spark.mesos.proxy.baseURL=http://localhost:5050 -m mesos://127.0.0.1:5050` Run a spark example: `./bin/spark-submit --class org.apache.spark.examples.SparkPi --master mesos://10.10.1.79:7078 --deploy-mode cluster --executor-memory 2G --total-executor-cores 2 http://<path>/spark-examples_2.11-2.1.1.jar 10` Sandbox uri is shown at the bottom of the page: Redirection works as expected: Author: Stavros Kontopoulos <st.kontopoulos@gmail.com> Closes #18528 from skonto/adds_the_sandbox_uri.
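The sandbox uri format quoted in the message can be assembled with a small helper. This is a plain-Python sketch: `sandbox_url` and its parameter names are hypothetical, the ids in the usage are made up, and returning `None` when no proxy base URL is configured is just one way to model "no sandbox uri is shown".

```python
from typing import Optional


def sandbox_url(
    proxy_base_url: Optional[str], agent_id: str, scheduler_id: str, driver_id: str
) -> Optional[str]:
    """Build the per-driver sandbox link, or None if no base URL is configured."""
    if not proxy_base_url:
        return None
    base = proxy_base_url.rstrip("/")  # avoid a double slash before '#'
    return (
        f"{base}/#/slaves/{agent_id}"
        f"/frameworks/{scheduler_id}"
        f"/executors/{driver_id}/browse"
    )
```

For example, with `spark.mesos.proxy.baseURL=http://localhost:5050` the helper yields a link of the form `http://localhost:5050/#/slaves/<agent-id>/frameworks/<scheduler-id>/executors/<driver-id>/browse`.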

-

Xianyang Liu authored

## What changes were proposed in this pull request? We should reset numRecordsWritten to zero after `DiskBlockObjectWriter.commitAndGet` is called. When `revertPartialWritesAndClose` is called, we decrease the written records in `ShuffleWriteMetrics`. However, we currently decrease the written records to zero, which is wrong; we should only subtract the number of records written after the last `commitAndGet` call. ## How was this patch tested? Modified existing test. Author: Xianyang Liu <xianyang.liu@intel.com> Closes #18830 from ConeyLiu/DiskBlockObjectWriter.
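The bookkeeping can be modelled in a few lines. The following plain-Python sketch is an illustration of the counter logic only: `Writer`, `ShuffleWriteMetrics`, and their members are simplified stand-ins written for this example, not the Spark classes.

```python
class ShuffleWriteMetrics:
    """Toy metrics object holding the total records written."""

    def __init__(self) -> None:
        self.records_written = 0


class Writer:
    """Toy writer that tracks records written since the last commit."""

    def __init__(self, metrics: ShuffleWriteMetrics) -> None:
        self.metrics = metrics
        self.num_records_written = 0  # uncommitted records only

    def write(self) -> None:
        self.num_records_written += 1
        self.metrics.records_written += 1

    def commit_and_get(self) -> int:
        committed = self.num_records_written
        # Reset so a later revert only undoes records written after this commit.
        self.num_records_written = 0
        return committed

    def revert_partial_writes(self) -> None:
        self.metrics.records_written -= self.num_records_written
        self.num_records_written = 0
```

Writing three records, committing, writing two more, and then reverting leaves the metrics at three; without the reset in `commit_and_get`, the revert would subtract all five.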

-

- Aug 06, 2017

-

-

Sean Owen authored

## What changes were proposed in this pull request? Remove duplicate test-jar:test spark-sql dependency from Hive module; move test-jar dependencies together logically. This generates a big warning at the start of the Maven build otherwise. ## How was this patch tested? Existing build. No functional changes here. Author: Sean Owen <sowen@cloudera.com> Closes #18858 from srowen/DupeSqlTestDep.

-

BartekH authored

I have discovered that the "full_outer" name option works in Spark 2.0, but it is not printed in the exception. Please verify. Author: BartekH <bartekhamielec@gmail.com> Closes #17985 from BartekH/patch-1.

-

actuaryzhang authored

## What changes were proposed in this pull request? Support offset in SparkR GLM #16699 Author: actuaryzhang <actuaryzhang10@gmail.com> Closes #18831 from actuaryzhang/sparkROffset.

-